Camera stream on the Web

Nowadays, almost every phone or laptop has a built-in camera. In this article, we’re going to focus on what it can bring to the way we use the web on our devices. We will mainly talk about video and keep audio for another time.

With HTML5 and the development of WebRTC, using the camera in a web environment has become easier. Before HTML5, a browser could only play video through a plug-in (sounds old, right?). Things are simpler now, even though uneven browser support remains a constraint.

State of the art

At first, access to the webcam stream was mostly used on artistic or event websites. Many early creative development projects were based on the camera. Some of them are now outdated because web APIs and browser behaviors have changed: the Media Stream API changed the way data is retrieved, and the way browsers grant access to phone or laptop components also evolved.

Accessing the video output also means being able to modify it. We can find some “still working” examples:

Thanks to the access to the pixel composition of one frame through a canvas element, the camera output can be transformed.
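As a minimal sketch of that idea, the function below turns one frame to grayscale by editing the RGBA bytes that `getImageData` returns. The name `toGrayscale` and the luma weights are assumptions chosen for illustration, not taken from a specific project.

```javascript
// Convert RGBA pixel data (as found in ImageData.data) to grayscale, in place.
// `toGrayscale` is a hypothetical helper name; the weights are the common
// Rec. 601 luma coefficients, an assumption for this sketch.
function toGrayscale(rgba) {
  for (let i = 0; i < rgba.length; i += 4) {
    const luma = 0.299 * rgba[i] + 0.587 * rgba[i + 1] + 0.114 * rgba[i + 2];
    rgba[i] = rgba[i + 1] = rgba[i + 2] = luma; // alpha (i + 3) is left untouched
  }
  return rgba;
}

// In a browser, with a <video> playing the stream and a 2D canvas context `ctx`:
//   ctx.drawImage(video, 0, 0, width, height);
//   const frame = ctx.getImageData(0, 0, width, height);
//   toGrayscale(frame.data);
//   ctx.putImageData(frame, 0, 0);
```

Running this on every animation frame gives a live filtered view of the camera.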

This first step was a great one, but the camera had a lot more to offer than just streaming.

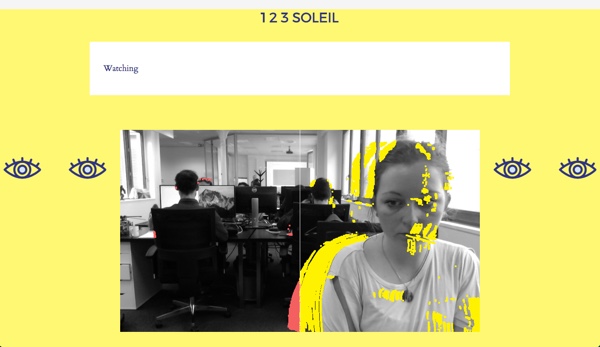

Images can be processed with difference blending, which makes it really convenient to see motion between two frames. You can get the coordinates of every pixel that changed. This means it’s not only possible to display or modify the camera output: you can also physically interact with it.
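To make the "coordinates of every pixel that changed" concrete, here is a small sketch that compares two frames given as raw RGBA arrays. The name `changedPixels` and the default threshold are assumptions for illustration.

```javascript
// Return the {x, y} coordinates of pixels whose color changed between two
// frames. `prev` and `curr` are RGBA arrays of the same size (ImageData.data)
// and `width` is the frame width in pixels. The name `changedPixels` and the
// default threshold are assumptions for this sketch.
function changedPixels(prev, curr, width, threshold = 32) {
  const coords = [];
  for (let i = 0; i < curr.length; i += 4) {
    // A 'difference' blend computes the absolute difference per channel;
    // here the three color channels are summed into one value.
    const diff =
      Math.abs(curr[i] - prev[i]) +
      Math.abs(curr[i + 1] - prev[i + 1]) +
      Math.abs(curr[i + 2] - prev[i + 2]);
    if (diff >= threshold) {
      const pixel = i / 4;
      coords.push({ x: pixel % width, y: Math.floor(pixel / width) });
    }
  }
  return coords;
}
```

The returned coordinates can then be matched against on-screen zones, for example to trigger a button when a hand passes over it.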

Nevertheless, the technology progressed slowly because of a lack of projects and diversity. The next step was the arrival of face, mouth and lips detection.

On the web, face tracking libraries started to appear, along with lip and eye movement recognition. Face detection became trendy thanks to the Snapchat app (filters), and motion detection thanks to the Kinect.

In phone apps it led to the creation of games.

TrackingJS is a library that includes all the kinds of interaction I’ve listed, and more (color and border tracking).
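For reference, a face tracker with tracking.js can be wired up roughly as follows. This is a sketch, assuming the library and its face classifier data file are loaded in the page so that a global `tracking` object exists; `startFaceTracking` and `onFaces` are hypothetical names, and the library is passed as a parameter only to keep the function self-contained.

```javascript
// Start face tracking on a <video> element with tracking.js.
// Assumes tracking.js and its face classifier data are loaded.
// `startFaceTracking` and `onFaces` are hypothetical names for this sketch.
function startFaceTracking(trackingLib, videoSelector, onFaces) {
  const tracker = new trackingLib.ObjectTracker('face');
  // Each 'track' event carries an array of rectangles {x, y, width, height}.
  tracker.on('track', (event) => onFaces(event.data));
  // { camera: true } asks tracking.js to request the webcam stream itself.
  trackingLib.track(videoSelector, tracker, { camera: true });
  return tracker;
}

// In a page:
//   startFaceTracking(tracking, '#video', (faces) => console.log(faces));
```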

All those ways to interact were the starting point for projects in domains such as marketing, social networks, security, online try-on and sales…

But movement tracking seems quite incomplete. We now have all the data we need from the camera and good algorithms to track it, but what’s still missing is a full and fast analysis of the image. It could be useful to detect an object, an expression or a surface.

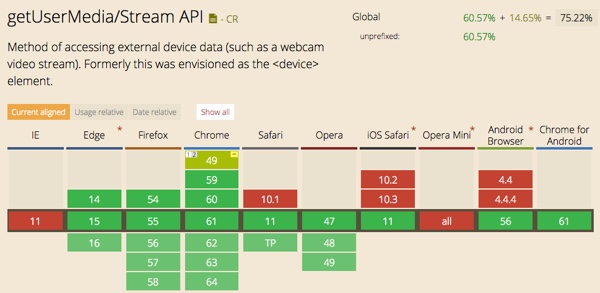

Another ongoing issue is late browser support (IE and Safari). On mobile, Android was first in February 2017. iOS was the last because WebRTC was not supported until September 19th, 2017. Indeed, iOS 11 has been launched and with it Safari 11 supports video streams 🎉; Firefox and Chrome for iOS will too.
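Given this uneven support, it can help to check for the API before showing any camera UI. A minimal sketch, where `supportsCamera` is a hypothetical helper name:

```javascript
// Report whether the given navigator-like object exposes the promise-based
// getUserMedia. `supportsCamera` is a hypothetical helper name.
function supportsCamera(nav) {
  return Boolean(
    nav && nav.mediaDevices && typeof nav.mediaDevices.getUserMedia === 'function'
  );
}

// In a browser:
//   if (!supportsCamera(navigator)) { /* show a fallback message */ }
```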

With the evolution of 3D on the web and of device capabilities, it is also possible to use the video stream to produce a 3D output.

How do I get the data?

The Media Capture and Streams API, often called the Media Stream API or the Stream API, is an API related to WebRTC. It supports streams of audio or video data, the methods for working with them, the constraints associated with the type of data, the success and error callbacks when using the data asynchronously, and the events that are fired during the process. The output of the MediaStream object is linked to a consumer. It can be a media element, like <audio> or <video>, the WebRTC RTCPeerConnection API or a Web Audio API MediaStreamAudioSourceNode.
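To illustrate the constraints and the track-based nature of a stream, here is a small sketch. The helper names `buildVideoConstraints` and `stopStream` and the default sizes are assumptions; `width`, `height` and `facingMode` are standard constraint properties, and `getTracks()` and `stop()` are standard MediaStream and MediaStreamTrack methods.

```javascript
// Build a constraints object for getUserMedia. `buildVideoConstraints` is a
// hypothetical helper; the default size is an arbitrary assumption.
function buildVideoConstraints({ width = 640, height = 480, front = true } = {}) {
  return {
    audio: false,
    video: {
      width: { ideal: width },
      height: { ideal: height },
      // 'user' is the front camera, 'environment' the rear one
      facingMode: front ? 'user' : 'environment',
    },
  };
}

// Stop every track of a stream, which releases the camera.
function stopStream(stream) {
  stream.getTracks().forEach((track) => track.stop());
}
```

Using `ideal` rather than `min` lets the browser fall back to the closest available resolution instead of rejecting the request.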

Basic code to get the stream

```javascript
// Assumed canvas size, used here as the minimum video resolution
const canvasWidth = 640;
const canvasHeight = 480;

const constraints = {
  audio: true,
  video: {
    width: { min: canvasWidth },
    height: { min: canvasHeight }
  }
};

// Older browsers might not implement mediaDevices at all, so set an empty object first
if (typeof navigator.mediaDevices === 'undefined') {
  navigator.mediaDevices = {};
}

if (typeof navigator.mediaDevices.getUserMedia === 'undefined') {
  navigator.mediaDevices.getUserMedia = function(constraints) {
    // First get ahold of the legacy getUserMedia, if present
    var getUserMedia = navigator.webkitGetUserMedia || navigator.mozGetUserMedia;

    // Some browsers just don't implement it - return a rejected promise with an error
    // to keep a consistent interface
    if (!getUserMedia) {
      return Promise.reject(new Error('getUserMedia is not implemented in this browser'));
    }

    // Otherwise, wrap the call to the old navigator.getUserMedia with a Promise
    return new Promise(function(resolve, reject) {
      getUserMedia.call(navigator, constraints, resolve, reject);
    });
  };
}

navigator.mediaDevices
  .getUserMedia(constraints)
  .then(initSuccess)
  .catch(function(err) {
    console.log(err.name + ": " + err.message);
  });

function initSuccess(requestedStream) {
  var video = document.querySelector('video');

  // Older browsers may not have srcObject
  if ("srcObject" in video) {
    video.srcObject = requestedStream;
  } else {
    // Avoid using this in new browsers, as it is going away.
    video.src = window.URL.createObjectURL(requestedStream);
  }

  video.onloadedmetadata = function(e) {
    video.play();
  };
}
```

Analyse video data with canvas

```javascript
// Minimal wrapper so the method below is runnable. The class name and the
// constructor are assumptions; the three contexts are 2D contexts of canvases
// that share the same size.
class MotionDetector {
  // options: { captureContext, motionContext, diffContext,
  //            canvasWidth, canvasHeight, pixelDiffThreshold }
  constructor(options) {
    Object.assign(this, options);
    this.isReadyToDiff = false; // true once a previous frame has been drawn
  }

  capture(video) {
    const { canvasWidth, canvasHeight } = this;

    // copy the current frame to the capture canvas, then to the motion canvas
    this.captureContext.drawImage(video, 0, 0, canvasWidth, canvasHeight);
    const captureImageData = this.captureContext.getImageData(0, 0, canvasWidth, canvasHeight);
    this.motionContext.putImageData(captureImageData, 0, 0);

    // diff current capture over previous capture, leftover from last time
    this.diffContext.globalCompositeOperation = 'difference';
    this.diffContext.drawImage(video, 0, 0, canvasWidth, canvasHeight);
    const diffImageData = this.diffContext.getImageData(0, 0, canvasWidth, canvasHeight);

    if (this.isReadyToDiff) {
      const rgba = diffImageData.data;
      // pixel adjustments are done by reference directly on diffImageData
      for (let i = 0; i < rgba.length; i += 4) {
        const pixelDiff = rgba[i] * 0.6 + rgba[i + 1] * 0.6 + rgba[i + 2] * 0.6;
        if (pixelDiff >= this.pixelDiffThreshold) {
          // do what you want with the data
        }
      }
    }

    // draw current capture normally over diff, ready for next time
    this.diffContext.globalCompositeOperation = 'source-over';
    this.diffContext.drawImage(video, 0, 0, canvasWidth, canvasHeight);
    this.isReadyToDiff = true;
  }
}
```

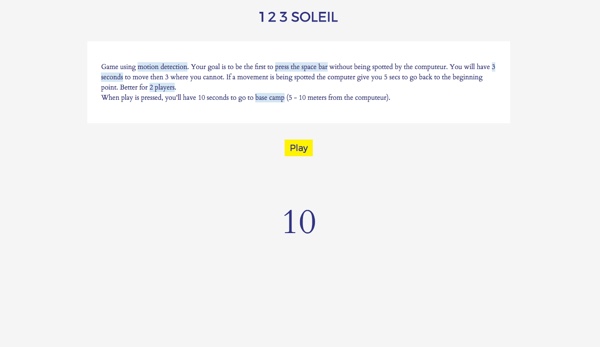

Using this code, I made a prototype based on motion detection. I recreated the children’s game ‘red light / green light’ (1️⃣ 2️⃣ 3️⃣ ☀️ in French), in which you have to reach a finish line without being spotted. You can move towards this finish line in sequences of 3 seconds.
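The game rule can be sketched on top of the motion data. This is not the prototype's actual code: it assumes that the "green" phase (moving allowed) and the "red" phase (camera watching) each last 3 seconds and alternate, and that the player is spotted when the share of changed pixels during a red phase exceeds a ratio. All names and values are hypothetical.

```javascript
// Assumed phase length: 3 seconds for both green and red phases.
const PHASE_MS = 3000;

// Return the phase for a given time since the game started.
function phaseAt(elapsedMs) {
  return Math.floor(elapsedMs / PHASE_MS) % 2 === 0 ? 'green' : 'red';
}

// The player is spotted when too many pixels changed during a red phase.
// `maxMotionRatio` is an arbitrary tolerance for camera noise.
function isSpotted(elapsedMs, changedPixelCount, totalPixels, maxMotionRatio = 0.02) {
  return phaseAt(elapsedMs) === 'red' && changedPixelCount / totalPixels > maxMotionRatio;
}
```

Here `changedPixelCount` would be the number of pixels that pass the `pixelDiffThreshold` test in the capture loop.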

Here are the GitHub repository and the demo.

Possible uses

- Track user behavior

- Games

- Security apps (motion detection with a camera linked to a Raspberry Pi)

- Presence detection

- Interactive background

- Take a picture on movement

- Glasses/hat try-on (haircut…)

- Screen unlock

- Control a computer without touching it

What’s next / new trends

The Stream API and WebRTC are finally better and better supported, and everything is starting to move faster.

Currently, two technologies are making web use of the camera trendy again.

First, deep learning and machine learning, methods already used by Google, IBM, Microsoft, Amazon, Adobe, Yandex or Baidu. Recognizing a gesture or an object is becoming easier in a web-based environment thanks to new APIs. Possibilities are growing fast thanks to improvements in artificial intelligence. New projects, games for example, are starting to grow from those APIs.

It allows new ideas and interactions with the camera:

- Adding Sounds To Silent Movies

- Translate text on an image

- Automatic image description

The second one is augmented reality. Currently, AR experiments are mostly available in mobile apps, but soon you will be able to open an AR website. WebRTC on iPhone is a step in this direction. This will have a huge impact on how we use our devices and bring new possibilities. For example, instead of looking at a map, you can just look around you and find what you want.

AR first developed through apps.

Developers around the globe are already working on libraries that will help build web AR projects. We will play with one of them, AR.js, in an upcoming article. The arrival of iOS 11 and ARKit shows that we are now heading towards more projects based on this technology.

The 3D library Three.js is also very useful for building an AR web project; some work has already been done to help use it in an AR environment.

Conclusion

Even though web usage of the camera has had its ups and downs, it has always moved in a positive direction overall. Recently, things have started to move faster. We now have new possibilities, new APIs, more capable devices and better browser support. The only thing left is new projects, so let’s get to it.

Waiting for interactive AR websites ⏳💻 pic.twitter.com/k1ZrqxuNKc

Anthony Antonellis (@a_antonellis), September 25, 2017

