How to copy an Elasticsearch index from production to a local Docker container

I faced an issue with Elasticsearch last week, and in order to reproduce it, I wanted to have the full index on my development machine.

To do that, I have some options:

- Use a backup: but I only want one index. It would be too heavy to download the whole backup. Moreover, it does not fit on my laptop;

- Create a new snapshot: I don’t want to edit the production configuration;

- Export the index as JSON and re-index it: I would need too much custom code, and it would be too slow;

- Use the reindex API with a remote source (production) and a local destination (my development environment): that’s what we’re going to use.

Our setup

In production

- The cluster runs on bare-metal;

- The Elasticsearch cluster is not directly reachable over HTTP;

- The servers are not even reachable over SSH;

- We have a bastion to protect our infrastructure.

In development

- The Elasticsearch node is in a docker container.

The reindex API

I will use the reindex API.

This API allows us to copy an index to another index. Even better, it can also copy data from a remote cluster.

The syntax is something similar to:

POST _reindex
{
  "source": {
    "remote": {
      "host": "http://otherhost:9200",
      "username": "user",
      "password": "pass"
    },
    "index": "my-index-000001"
  },
  "dest": {
    "index": "my-new-index-000001"
  }
}

We will use the development node to initiate the reindex. It means we will send the HTTP request to the Elasticsearch node running in the Docker container.

How to expose the production to a local container

Since there are several security layers to pass through, we will use an SSH tunnel to expose the production cluster to the local container.

So we need to:

- open an SSH connection to the production cluster: keys HostName and User;

- by using the bastion: key ProxyJump;

- configure the bastion for the “proxy jump”: second part of the config;

- bind port 9200 (on the production) to 9201 (on our host): key LocalForward;

- bind 0.0.0.0 on our host, instead of 127.0.0.1 to allow our container to reach the tunnel: key GatewayPorts;

- disable TTY because it’s not needed: key RequestTTY;

- display a nice message when opening the connection: key RemoteCommand;

- use a nice name for the connection: main key Host.

All the configurations together:

# .ssh/config
Host project-prod-tunnel-es
    ProxyJump project-prod-bastion-1
    Hostname 10.20.0.243
    User debian
    LocalForward 9201 10.20.0.243:9200
    RequestTTY no
    GatewayPorts true
    RemoteCommand echo "curl http://127.0.0.1:9201" && cat

Host project-prod-bastion-1
    Hostname 1.2.3.4
    User debian

Note: Our Elasticsearch nodes do not listen on 127.0.0.1, but on their local network IP. That’s why the LocalForward targets 10.20.0.243 and not 127.0.0.1.

WARNING: When you use LocalForward together with GatewayPorts, you are opening a big security hole into your production cluster: all computers on your network, all containers on your computer, and all local applications will be able to reach the production. This risk should be treated very carefully!

You will also need to have your SSH key installed on your servers. To open the tunnel, run the following command:

ssh project-prod-tunnel-es
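Before going further, it can help to confirm that the local end of the tunnel accepts connections. The following is a minimal sketch in Python, not part of the original setup: `port_is_open` is a hypothetical helper name, and it only checks that a TCP connection to port 9201 succeeds, not that Elasticsearch answers correctly.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, unreachable host, and timeouts.
        return False


if __name__ == "__main__":
    # 9201 is the local port bound by the LocalForward line of the SSH config.
    print("tunnel reachable:", port_is_open("127.0.0.1", 9201))
```

Run it on the host while the `ssh project-prod-tunnel-es` session is open. If it reports that the tunnel is not reachable, the reindex request from the container will fail as well.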

How to start the reindex

Configuration of the local node

The remote reindex server must be “whitelisted” in your local Elasticsearch configuration. From inside the container, the tunnel is reachable at the IP of the Docker host, which is usually the container’s gateway. You can find it with the following command:

docker inspect -f '{{range .NetworkSettings.Networks}}{{.Gateway}}{{end}}' <container_id>

Once you have the IP, you must allow it in the configuration:

# /etc/elasticsearch/elasticsearch.yml

reindex.remote.whitelist: "172.21.0.1:9201"

Don’t forget to rebuild and restart the container.
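The lookup and the configuration line above can also be derived in one go. This is a rough sketch in Python that assumes you feed it the JSON printed by a plain `docker inspect <container_id>` (without the `-f` template). The function names and the `project_default` network name in the sample are hypothetical; port 9201 is the tunnel port from the SSH config.

```python
import json


def gateways_from_inspect(inspect_output: str) -> list[str]:
    """Collect the gateway IPs found in the JSON output of `docker inspect`."""
    gateways = []
    for container in json.loads(inspect_output):
        networks = container.get("NetworkSettings", {}).get("Networks") or {}
        for network in networks.values():
            gateway = network.get("Gateway")
            if gateway and gateway not in gateways:
                gateways.append(gateway)
    return gateways


def whitelist_line(gateways: list[str], port: int = 9201) -> str:
    """Build the elasticsearch.yml line that allows each gateway on the tunnel port."""
    entries = ", ".join(f"{gateway}:{port}" for gateway in gateways)
    return f'reindex.remote.whitelist: "{entries}"'


if __name__ == "__main__":
    # Hypothetical, trimmed-down sample of what `docker inspect` prints.
    sample = (
        '[{"NetworkSettings": {"Networks": '
        '{"project_default": {"Gateway": "172.21.0.1"}}}}]'
    )
    print(whitelist_line(gateways_from_inspect(sample)))
```

If the container is attached to several networks, the sketch lists every gateway; in that case keep only the one through which the container actually reaches the host.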

Start the reindex

Once you are done, you can execute the following HTTP request on the local node to start the task:

POST _reindex?wait_for_completion=false
{
  "source": {
    "index": "my_index_to_debug",
    "remote": {
      "host": "http://172.21.0.1:9201"
    },
    "size": 10
  },
  "dest": {
    "index": "my_index_to_debug"
  }
}
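If you prefer to script the request rather than paste it into a console, here is a minimal sketch using only the Python standard library. It assumes the local node is published on the host at `http://127.0.0.1:9200`, which the setup above does not specify, and the helper names `build_reindex_body` and `start_reindex` are made up for the illustration.

```python
import json
import urllib.request


def build_reindex_body(remote_host: str, index: str, batch_size: int = 10) -> dict:
    """Build the same JSON body as the request above."""
    return {
        "source": {
            "index": index,
            "remote": {"host": remote_host},
            "size": batch_size,  # documents fetched per batch from the remote
        },
        "dest": {"index": index},
    }


def start_reindex(es_url: str, body: dict) -> str:
    """POST the body to _reindex without waiting, and return the task ID."""
    request = urllib.request.Request(
        f"{es_url}/_reindex?wait_for_completion=false",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)["task"]


if __name__ == "__main__":
    body = build_reindex_body("http://172.21.0.1:9201", "my_index_to_debug")
    print(json.dumps(body, indent=2))
    # Assumed local URL; uncomment once the tunnel and the local node are up.
    # print(start_reindex("http://127.0.0.1:9200", body))
```

The call to `start_reindex` is left commented out so that running the file only prints the body; nothing is sent to the cluster until you enable it.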

This request will return a task ID. In my case: fLDgREJ0S46ETKfmPnRtHw:7330

- I disabled wait_for_completion, because the index is about 10 GB;

- You can monitor the progress thanks to the task API: GET _tasks/fLDgREJ0S46ETKfmPnRtHw:7330;

- I used a size of 10, because with a bigger value, I hit some memory limit on my local node, as said in the documentation;

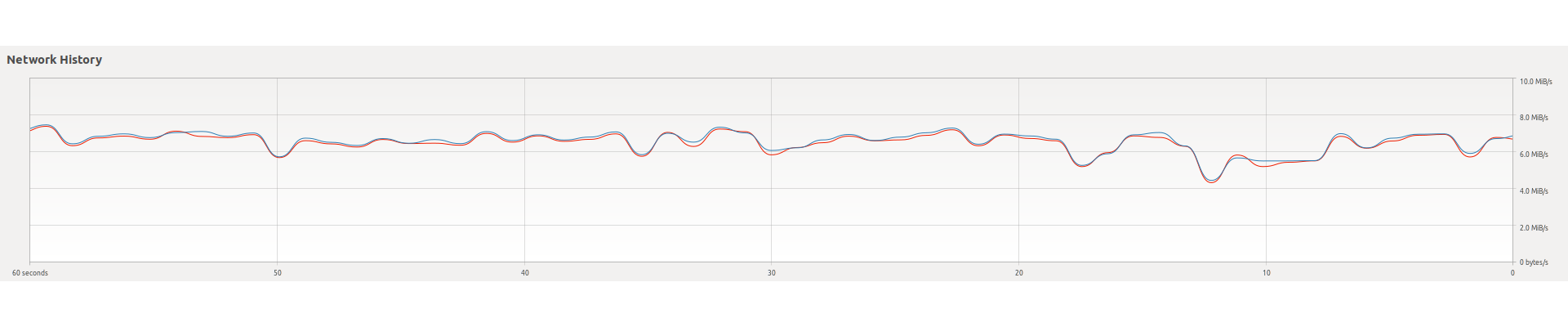

- The bandwidth with this configuration is about 7.0MiB/s;

- It took about 1 hour to transfer 10 GB of data.
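To turn the task API response into a progress figure, one option is to compare the documents already processed with the total, both of which appear in the `status` object of a reindex task. The sketch below is only an illustration: the helper names and the local URL are assumptions, and the numbers in the sample are invented, not taken from the run described above.

```python
import json
import urllib.request


def reindex_progress(task_response: dict) -> float:
    """Return the fraction of documents processed so far, between 0.0 and 1.0."""
    status = task_response["task"]["status"]
    total = status.get("total", 0)
    if total == 0:
        return 0.0
    done = (
        status.get("created", 0)
        + status.get("updated", 0)
        + status.get("deleted", 0)
    )
    return done / total


def fetch_task(es_url: str, task_id: str) -> dict:
    """GET _tasks/<task_id> on the local node and decode the JSON response."""
    with urllib.request.urlopen(f"{es_url}/_tasks/{task_id}") as response:
        return json.load(response)


if __name__ == "__main__":
    # Invented sample: 250 documents created out of 1000.
    sample = {
        "completed": False,
        "task": {"status": {"total": 1000, "created": 250, "updated": 0, "deleted": 0}},
    }
    print(f"{reindex_progress(sample):.0%}")
    # With a live node (assumed URL):
    # print(reindex_progress(fetch_task("http://127.0.0.1:9200", "fLDgREJ0S46ETKfmPnRtHw:7330")))
```

Calling `fetch_task` every few seconds and stopping once the response reports `completed` as true gives a simple progress loop.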

Conclusion

With very little configuration (one line in the Dockerfile and a few lines in our .ssh/config), we managed to call the production cluster from a development container.

This blog post was about Elasticsearch, but it would be exactly the same for other TCP services, like PostgreSQL, RabbitMQ, or Redis.

And don’t forget to close your tunnel once you’re done!
